Introduction to Gender-Based Online Hate and Deepfakes


I initially created this workshop for the Hate To Hope Online Course, but my aim is to make it accessible to all my readers. Even if you aren't directly involved with students or young adults, you may find some of these activities and academic resources valuable. After all, we all encounter online hate, and we all know someone who may become its target.

The Context

Social workers have a duty to pursue social justice, which includes understanding how hate takes hold among young adults. Recognizing how hate forms equips us to address its root causes and prevent harm, especially given the heightened risks adolescents and young adults face due to their age, socioeconomic status, and limited experience.

While the University of Calgary's Bachelor of Social Work program has made progress in identifying structural barriers affecting the general population, a critical gap exists in understanding the intersection of young adults and online radicalization. Hence, it is essential for social workers and professionals working with this demographic to engage in constructive dialogues on these pressing issues.

This discussion is part of a broader topic on radicalization. The insights gained from this lesson plan should serve as a catalyst for discussions about governments repatriating individuals who have joined ISIS. The lesson plan can also foster more nuanced discussions of groups like Hamas and other religious militant or extremist organizations.

Current Challenges

While it's crucial to help victims of online hate, it's equally vital to view the perpetrators as products of a larger system. The first challenge is helping guardians and social workers build empathy and understanding for the individuals who engage in gender-based online violence, which is often perpetrated by men. This violence includes online sexual harassment, bullying, and intimidation carried out through text, video, and AI-generated content.

The gender dynamic between the worker and the young adult complicates this challenge. Most social workers are women, which can lead to resistance from clients, particularly male ones, due to misogyny or a perceived lack of relatability. Encouraging more men to serve as social workers or teachers and act as role models could help, but this may not be immediately practical and could perpetuate unequal gender dynamics. It's crucial to emphasize that women shouldn't bear sole responsibility for correcting men's behavior. The course aims to raise awareness of this issue and its unique challenges.

The second challenge relates to AI-based hate, specifically deepfake pornography used as a weapon against ex-partners or public figures. The legislative complexities surrounding this issue call for open discussion. The final challenge is raising awareness of how social media algorithms contribute to the formation of echo chambers.

Lesson Plan Overview

Title: Introduction to Gender-Based Online Hate and Deepfakes

Objective: By the end of this session, participants will demonstrate an understanding of how social media algorithms can exacerbate gender-based online hate.

Materials Required: This course can be conducted either in person or online, but participants need internet access to listen to podcasts and read articles. Paper or a whiteboard will also be used for in-class activities and reflections.

Description of Learners: This course is open to anyone, including high school students, but its primary audience is social work students. It can be integrated into a policy class or run as a standalone course.

Duration of Lesson: The course can either be spread out over 4-5 weeks or completed within a week, depending on the instructional approach.

Week 1: Introduction to Hate Speech Laws

Pre-lesson Assignment: Find an article covering an incident of femicide or other gender-based violence. In your own words, write a paragraph summarizing the incident.

- Questions to answer: How easy was it to find an article about the topic of "femicide"? Does the article link terrorist activity to any online activities? If so, how does the news article frame or label the online activities/group?

Class Activity: Divide the class into groups. Instead of simply presenting their findings, the groups engage in a mock legislative debate. Assign each group a different country with its own hate speech laws (Belgium, Estonia, France, Canada, Germany, Japan, or South Africa). Each group then proposes and defends its country's approach to regulating hate speech in a simulated legislative discussion. This hands-on approach encourages deeper understanding and critical thinking.

Student Reflection: After the mock legislative debate, challenge students to consider the complexities and nuances of applying hate speech laws to real-world situations. In pairs or small groups, discuss how the strictest laws might impact freedom of speech and the potential unintended consequences. Encourage students to think beyond the immediate situation and explore the broader implications of regulating hate speech in a global context. This exercise promotes a more comprehensive understanding of the challenges surrounding hate speech legislation.

Lesson: The instructor leads a discussion on the challenges of defining hate speech, including the efforts of the European Union and technology companies to establish rules. The class then explores four possible types of definitions of hate speech: teleological, consequentialist, formal, and consensus definitions. The lesson draws on Hietanen and Eddebo's article.

Hietanen, M., & Eddebo, J. (2022). "Towards a Definition of Hate Speech—With a Focus on Online Contexts." The Journal of Communication Inquiry. https://doi.org/10.1177/01968599221124309

Group Activity: Students are given real-world examples of speech or online content. On a T-chart, they sort each example into one of two columns: potential hate speech under a broad definition, or excluded under a narrow definition. This exercise encourages group discussion while surfacing the complexities of defining hate speech. Groups then present selected examples and their reasoning to the class, and these real-life cases become the starting point for an in-depth discussion of the challenges of classifying hate speech.

Week 2: Fostering Empathy

Pre-lesson Assignment: Before class, students watch Aaron Stark's TED Talk, in which he discusses how he nearly became a school shooter. They evaluate how access to an online safe space might have affected his situation, considering both the potential support and the risks associated with such spaces.

Class Activity: Divide the class into five groups, each focusing on a different protective factor topic: economics, gender, physical characteristics (including race and ability), religion, and sexuality. Each group discusses both the protective factors that keep individuals from falling into online radicalization and the associated risk factors. Groups then present their findings to one another, building a more comprehensive understanding.

Lesson: In the first part of the lesson, the instructor examines how the online subculture of incels ("involuntary celibates") formed, applying a feminist lens to analyze media reporting on incel stories. This part draws on Byerly's article and emphasizes the critical role media portrayal plays in shaping perceptions.

In the second part of the class, a deep dive into incel online forums examines their recruitment methods and community dynamics, highlighting how these forums operate apart from mainstream social media platforms. The instructor explores the double-edged nature of these communities and discusses how algorithms may both mitigate problems and introduce new ones. This segment draws on Pelzer et al.'s article.

Byerly, C. M. (2020). "Incels Online: Reframing Sexual Violence." The Communication Review, 23(4), 290–308. https://doi.org/10.1080/10714421.2020.1829305

Pelzer, B., Kaati, L., Cohen, K., & Fernquist, J. (2021). "Toxic Language in Online Incel Communities." SN Social Sciences, 1(8). https://doi.org/10.1007/s43545-021-00220-8

Group Activity: Divide the class into three groups, each assigned one topic: organizations, social media channels, or online forums. Each group identifies positive influences, such as public figures, social media accounts, or websites, that offer dating or sexual health advice. Compile these findings on a whiteboard, then share them on social media with relevant hashtags, tagging the figures involved, to promote awareness of constructive resources.

Week 3: Tools to Counter Online Radicalization

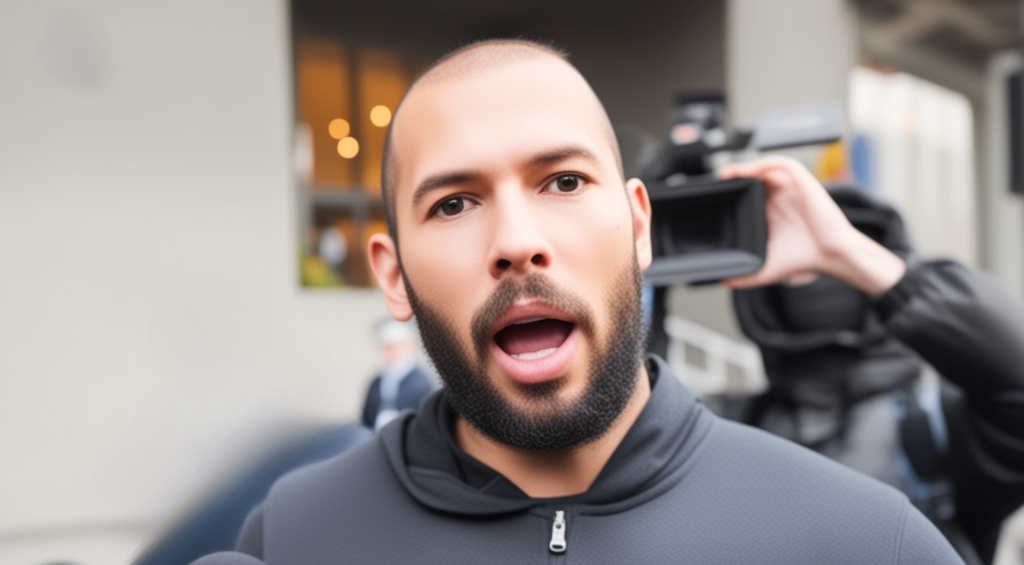

Pre-lesson Assignment: Before class, students listen to the episode of Vox's "Today Explained" on Andrew Tate, which examines how figures like him target male insecurity. They identify risk factors that might make someone susceptible to his rhetoric and consider what a feminist perspective reveals about his rise. Students also consider Vox Media's potential biases.

Class Activity: Run a devil's advocate exercise, issuing trigger warnings as needed. Challenge the class to brainstorm strategies for recruiting marginalized individuals and drawing them toward online radicalization. Encourage students to search for online entities that target marginalized communities, including websites, Discord servers, YouTube or TikTok channels, and groups.

Lesson: The instructor explains the concepts of echo chambers and the algorithms behind social media applications. Use pages 21–22 of "Confronting Conspiracy Theories and Organized Bigotry at Home - A Guide for Parents and Caregivers" to address how to handle anti-democratic groups and overt hate. Emphasize key points from pages 8–12, which discuss the challenges of navigating edgy content and algorithms and of understanding teenage behavior. Stress the importance of not labeling groups, not cutting off online connections, and engaging young people through logical discussion.

Group Activity: Have students read pages 17–18 of the guide, which offer insights on how parents should address kids sharing edgy jokes or memes. Students then pair up and role-play a parent and child, using the guide's examples to set up scenarios and formulate responses. This interactive exercise builds understanding and effective communication within families about online content.

Week 4: AI and Deepfakes

Pre-lesson Assignment: Students are tasked with finding examples of deepfake images or videos online. They reflect on the potential impact of having a deepfake of themselves publicized, considering which types of deepfake content would have the most significant consequences in their lives and why.

Class Activity: The class is divided into four groups, each focused on exploring how deepfakes could disrupt or compromise safety and stability within distinct domains: government, non-profit organizations, corporations, and healthcare. This exercise encourages thoughtful analysis and discussion of deepfake threats across various sectors.

Lesson: The instructor examines how deepfakes are weaponized against vulnerable individuals and the challenges of verifying a video's authenticity. Emphasize emerging technologies such as digital watermarks, as well as the consequences of misinformation eroding institutional trust. The lesson draws on Farid's article.

Farid, H. (2021). "The Weaponization of Deep Fakes: Threats and Responses." The Journal of Intelligence, Conflict, and Warfare, 4(2), 87–94. https://doi.org/10.21810/jicw.v4i2.3720

Group Activity: Groups generate lists of measures to prevent deepfake creation and strategies for responding to deepfakes, including ways to support victims of deepnudes or other sexual deepfakes.

Final Assessment: Students are tasked with producing an essay or reflection outlining potential laws and policies applicable in educational institutions, organizations, or government settings. They must explain how these policies can mitigate deepfake and online radicalization issues while also addressing the potential pitfalls that could disproportionately affect marginalized groups.

Personal Reflection of the Workshop

Each week of the workshop addresses a different issue, offering a brief introduction to various sub-topics. A drawback, however, is that each topic is covered in only one week, even though each could warrant a full-semester course. It's important to acknowledge that certain topics and activities, such as deepfakes and role-playing, may trigger trauma responses in some participants. While alternative approaches to these lessons may exist, for now a content warning will be issued before each class begins. Participants will also have the option to review the curriculum in advance, allowing them to opt out of specific activities or lessons if necessary.

It's worth noting that this is a short course, and participants interested in these topics will need to pursue further learning. Additionally, terms like "terrorist" are loaded and carry significant connotations, so instructors must exercise caution and make an effort to use neutral terms when discussing sensitive subjects. Lastly, the idea of sympathizing or empathizing with incel terrorists is controversial. It's crucial to acknowledge our biases and privilege when discussing this topic.

Edited Header Image Courtesy of Mihai Barbu/Getty Images